A brief introduction to 3D (and how to make money with it)

Real-time 3D has been part of software ecosystems since the early 1990s, driven mainly by the video game industry. The release of the first PlayStation in 1994 brought 3D to the general public. Its development then kept accelerating, thanks to the rise of the personal computer and the explosion of graphics processors in the 2000s.

Since the 2010s, rendering quality has stagnated while use cases and markets have diversified. From mobile devices to workstations, by way of virtual reality (VR) and augmented reality headsets, this technology is no longer dedicated to the entertainment industry alone; it is increasingly present in scientific domains, energy, and the defense industry.

Some use cases

Beyond video games, which are clearly the main domain where 3D is used, we can identify three main use cases:

Serious gaming

Directly inspired by the gaming industry, serious gaming applies the conventions of video games to training or to the simulation of a scenario. Let’s look at a few examples:

Driving simulations: From driving schools to flight training, by way of race driver training and construction machinery, driving simulators make it possible to accumulate many training hours without expensive equipment. Furthermore, emergency scenarios can be simulated without any risk.

Scenario planning: Here, the goal is to simulate a full scenario involving several people or actors. The aim is not so much to train a single operator in technical gestures as to simulate the interactions and the evolution of a situation. Examples include simulating a crowd in a public place, or the coordination and interaction of emergency services during a disaster.

Factory simulation: Design your factory, simulate workstation ergonomics, try new layouts for the supply chain, and rehearse maintenance and dismantling operations before the first brick is even laid. That’s the goal of what is now called a “digital twin”. Just as the International Space Station has a twin on Earth for rehearsing operations, your factory can have a digital twin to simulate any possible operation and failure.

A digital twin can be used during the full lifetime of the product, from its design to its scrapping. Thanks to this, operators can be trained even before the object has been built, making them ready for the inauguration. Maintenance operations can be simulated without stopping production. Emergency situations can be simulated and repeated without any risk, and dismantling can be anticipated long before the end-of-life date.

Marketing

3D is also used for marketing purposes, to attract attention. At exhibitions, where you are surrounded by dozens or even hundreds of other companies, you have only a brief moment to engage potential customers and gain their interest in your product. Today, you can create posters or videos with 3D renderings that highlight your product. You can create these in three ways:

The media is completely artificial and created with design software.

The media is created with the application’s render engine but uses artificial data, or a prototype not included in commercial applications.

Prototype of trajectory visualization

The media is created with the commercial application, but the staging is done purely to obtain a beautiful result and may have little value from a business perspective.

DataViz

Finally, 3D is used in data visualization to help the user understand a set of data.

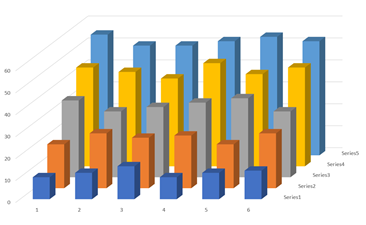

There was a trend in the late 1990s, fueled by the “3D chart” feature found in popular office software.

These charts are fine for marketing, but they are not data visualization. They create a nice effect, but they make the data harder to interpret.

Putting 3D in data visualization should help the user build a mental map of the data. The goal is not to make data more beautiful but to use our brain’s natural ability to think in 3D, to transform raw data into a model, then into knowledge, and finally into a solution.

Let’s see an example. Here are two representations of the same data: a 400*400 spreadsheet representing some physical phenomenon. In both, the data are represented as what is called a “heat map”: color indicates whether values are high or low. On the right, however, we also map the color onto a 3D surface. This representation may help your brain to better understand the topology of the data.
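As a rough illustration of this idea, the following Python sketch turns a grid of values into both representations: a color per cell for the flat heat map, and a height-displaced triangle mesh carrying the same colors. The dataset is synthetic, and the names `make_grid`, `heat_color`, and `build_surface`, as well as the blue-to-red ramp, are assumptions made for this example, not taken from any particular product.

```python
import math

def make_grid(n):
    """Synthetic n x n dataset standing in for the spreadsheet (made-up data)."""
    return [[math.sin(4 * math.pi * i / (n - 1)) * math.cos(4 * math.pi * j / (n - 1))
             for j in range(n)] for i in range(n)]

def normalize(grid):
    """Rescale all values to the range [0, 1]."""
    lo = min(min(row) for row in grid)
    hi = max(max(row) for row in grid)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a constant grid
    return [[(v - lo) / span for v in row] for row in grid]

def heat_color(t):
    """Map t in [0, 1] to an RGB color: blue for low values, red for high."""
    return (t, 0.0, 1.0 - t)

def build_surface(grid, height_scale=1.0):
    """Return (vertices, colors, triangles) for a colored 3D surface.

    Each cell becomes a vertex whose height (z) and color both come
    from the same normalized value, so the surface reinforces the heat map.
    """
    norm = normalize(grid)
    n_rows, n_cols = len(grid), len(grid[0])
    vertices, colors, triangles = [], [], []
    for i in range(n_rows):
        for j in range(n_cols):
            t = norm[i][j]
            vertices.append((float(j), float(i), t * height_scale))
            colors.append(heat_color(t))
    # Two triangles per grid cell, referencing vertices by index.
    for i in range(n_rows - 1):
        for j in range(n_cols - 1):
            a = i * n_cols + j
            b = a + 1
            c = a + n_cols
            d = c + 1
            triangles.append((a, b, c))
            triangles.append((b, d, c))
    return vertices, colors, triangles
```

The flat heat map only needs `heat_color` applied to each normalized cell; the 3D version additionally uses the value as a height. For a 400*400 grid, this sketch would produce 160,000 vertices and 318,402 triangles.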

So far we have talked about an isolated dataset. But 3D data representation is also very useful for understanding data in context, as in life sciences, materials science, or the energy industry, where the data represent something in the real world. You can represent a catheter in an organ during a surgical operation, or a well in a seismic dataset.

Technologies

When dealing with 3D rendering in software development, it is essential to choose the right technology from the outset.

At the foundation lie graphics APIs. These APIs are low level, generally hardware dependent, and handle basic primitives such as triangles, lines, and points.

Then come render engines. They can be generic or dedicated to a domain. They take care of hardware and low-level issues so that developers can focus on business logic. These engines can directly handle complex objects, depending on their area of focus: spheres, cylinders, meshes, assemblies, tensor fields, streamlines, etc.

At the highest level are the end-user applications. The Petrel E&P software platform and the Techlog wellbore software platform can be seen as 3D applications to some extent. Other good examples are computer-aided design (CAD) applications.
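To make the gap between the two lower layers concrete, here is a minimal Python sketch of the kind of work a render engine does on the developer’s behalf: turning a high-level object (a sphere) into the triangles a graphics API understands. The function name `tessellate_sphere` and its parameters are hypothetical, and real engines use more elaborate schemes; this is only one simple way to do it (a latitude/longitude subdivision).

```python
import math

def tessellate_sphere(radius, stacks, slices):
    """Approximate a sphere with triangles (latitude/longitude subdivision).

    stacks: number of bands from pole to pole (at least 2)
    slices: number of segments around the axis (at least 3)
    Returns (vertices, triangles), triangles being index triples.
    """
    vertices = []
    for i in range(stacks + 1):
        phi = math.pi * i / stacks            # 0 at the north pole, pi at the south pole
        for j in range(slices + 1):
            theta = 2.0 * math.pi * j / slices
            vertices.append((
                radius * math.sin(phi) * math.cos(theta),
                radius * math.sin(phi) * math.sin(theta),
                radius * math.cos(phi),
            ))

    triangles = []
    for i in range(stacks):
        for j in range(slices):
            a = i * (slices + 1) + j          # current ring
            b = a + slices + 1                # next ring
            if i != 0:                        # would be degenerate at the north pole
                triangles.append((a, b, a + 1))
            if i != stacks - 1:               # would be degenerate at the south pole
                triangles.append((a + 1, b, b + 1))
    return vertices, triangles
```

With `tessellate_sphere(1.0, 8, 16)`, a single "sphere" object becomes 224 triangles. The application developer only asks for a sphere; the engine produces the primitives and hands them to the graphics API.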

Effects

Creating a scene for a movie requires a lot of special effects: actors, makeup, sets, lights, fans, smoke…

It’s the same for 3D. You start with a base color, then apply lighting, shadowing, depth of field, and many other effects to arrive at the final image:

The same dataset with different effects
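The layering of effects can be sketched in a few lines of Python. The example below starts from a base color, then optionally applies a simple diffuse (Lambertian) lighting term and a distance fog. These two effects, the function names, and the default values are assumptions chosen for illustration; real engines implement many more effects, usually on the GPU.

```python
import math

def _unit(v):
    """Return v scaled to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def apply_lighting(base_color, normal, light_dir, ambient=0.2):
    """Diffuse lighting: brighter when the surface faces the light.

    light_dir points from the surface toward the light source.
    """
    n, l = _unit(normal), _unit(light_dir)
    diffuse = max(0.0, sum(a * b for a, b in zip(n, l)))
    intensity = ambient + (1.0 - ambient) * diffuse
    return tuple(c * intensity for c in base_color)

def apply_fog(color, depth, fog_color=(0.5, 0.5, 0.5), density=0.1):
    """Blend the color toward fog_color as the depth increases."""
    fog = 1.0 - math.exp(-density * depth)
    return tuple(c * (1.0 - fog) + f * fog for c, f in zip(color, fog_color))

def render_pixel(base_color, normal, light_dir, depth, effects=("lighting", "fog")):
    """Apply only the requested effects, in order, to a base color."""
    color = base_color
    if "lighting" in effects:
        color = apply_lighting(color, normal, light_dir)
    if "fog" in effects:
        color = apply_fog(color, depth)
    return color
```

Calling `render_pixel` with `effects=()` returns the raw base color, while enabling each effect changes the final value a little more. Making the effect list a parameter reflects the point of this section: which effects to turn on depends on the use case.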

And as in cinema, you don’t always need all the effects.

Some movies have meticulous staging but few special effects. At the other end of the spectrum, many blockbusters bet much more on the visual aspect.

When doing DataViz, every detail counts. Your 3D scene must contain enough effects to be naturally understood, but not so many that they pollute the data.

“Is this small spot a part of the data or a reflection caused by lighting?”

If the user asks this question, you have pushed the effects too far. However, you can add effects that help data interpretation.

When creating marketing material, you want to grab attention. Data interpretation fades into the background and, most of the time, you create snapshots or videos, so you can sacrifice performance in favor of rendering quality.

Serious gaming is somewhere in between. Studies have shown that you don’t need photorealistic rendering to feel part of the game. Rendering quality may improve the feeling of immersion, but this feeling is already present even with poor graphics.

However, you do need a good frame rate and interactivity, even more so when using a VR headset. Decreasing rendering complexity to achieve a better frame rate is totally acceptable in this case.

Client-side/server-side rendering

In recent years, with the development of cloud-based applications and the increase in network bandwidth, the notions of client-side and server-side rendering have emerged. Let’s see what’s behind these terms.

Client-side rendering is the traditional rendering we have all been experiencing for the past 20 years.

Rendering is done inside the application by the user’s computer.

Pros:

- Simple to put in place.
- No need for infrastructure or a network.
- It scales with the number of users, as each user provides the hardware.

Cons:

- Compatibility issues with the user’s hardware.
- Users have to buy new hardware to scale or to keep up with technology.
- Data needs to be sent to the user’s machine, which may raise security concerns.

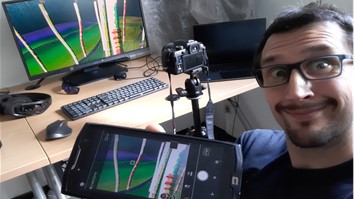

With server-side rendering, the rendering is done by a remote machine and the resulting video is streamed to a client device. It is like putting a camera in front of your computer and streaming the video to your smartphone.

Pros:

- No hardware compatibility issues, as you own the hardware.
- All users benefit from hardware upgrades.
- Data stays on the server. Only video is sent to the user.
- Brings high-quality rendering to low-performance devices.

Cons:

- Requires robust infrastructure and a reliable network.
- You must scale the infrastructure with the number of users.
- Requires extra development work compared to a standalone application.
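The scaling constraint of server-side rendering can be approximated with simple arithmetic. The Python sketch below is a back-of-the-envelope estimate only: the function names, and the figures used in the usage note (sessions per server, bitrate per stream), are hypothetical and would have to be measured for a real deployment.

```python
import math

def servers_needed(concurrent_users, sessions_per_server):
    """Number of rendering servers required to host every active session."""
    if sessions_per_server <= 0:
        raise ValueError("sessions_per_server must be positive")
    return math.ceil(concurrent_users / sessions_per_server)

def outbound_bandwidth_mbps(concurrent_users, stream_bitrate_mbps):
    """Total video bandwidth leaving the server side, in megabits per second."""
    return concurrent_users * stream_bitrate_mbps
```

For instance, assuming 100 concurrent users, 8 sessions per server, and 10 Mbit/s per video stream, this gives 13 servers and 1,000 Mbit/s of outbound traffic. With client-side rendering, both figures stay at zero on your side, since each user brings their own hardware.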

Conclusion

3D has been driven by the entertainment industry for decades, but other industries can now benefit from all the technological advances made over that time. Whereas it took several years to develop 3D-based software in the 2000s, it now takes just a few days to get a working prototype using high-level tools. Whereas a VR headset cost the price of a car 10 years ago, you can now find powerful devices for a few hundred dollars. Your development teams can now focus on what matters: adding business value to your applications, rather than spending time implementing all the logic behind 3D rendering.

Katja Zibrek, Sean Martin, Rachel McDonnell (2019). Is Photorealism Important for Perception of Expressive Virtual Humans in Virtual Reality?