Autonomous Operations - Age of Artificial Intelligence

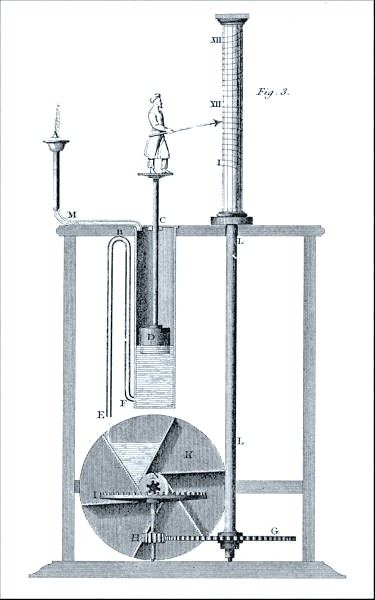

Automation, the implementation of processes that perform activities towards a goal without human assistance, has been a key contributor to the evolution of humanity, saving us time and costs and opening playgrounds beyond human physical capabilities. From the first documented automatic control systems in Ptolemaic Egypt in 270 BC (the water clock float regulator in Figure 1), we can fast-forward to the 17th century, when pressure, temperature, and speed regulators marked the beginning of control theory, which became mathematically conceptualized in the 19th century and was applied in microelectronic digital systems in the 20th century.

Today, we live in a time of continuous evolution of automation: a broad range of systems is becoming directly connected to the internet, the operational context of simple devices grows wider, and the complexity of autonomous behaviors keeps increasing. The current automation challenges are to connect all actors in the system, to collect the real-time operational data they provide, and to reason about how to control the system so that it achieves its goals efficiently and reliably.

Leaving aside the challenges of real-time connectivity and data collection, in this article we focus on adopting AI techniques for the reasoning and control of complex dynamic systems, capturing tacit and explicit knowledge that is often spread across a multitude of sources, including different forms of documentation, historical operational data, human domain experts and models tailored for certain configurations and subsystems. We introduce some of the key building blocks that automation systems can be built upon: AI planning, which provides us with plans for achieving the goals; automated diagnosis, which helps us understand situations in the world that we have not encountered before; behavior trees, which enable us to capture the actions we want to execute and how to act when their execution does not go as expected; and reinforcement learning, which helps to build models of the world with partial or no human assistance.

What is AI?

The concept of AI has been attracting attention for almost a century across literature, mathematics and industrial applications. One can choose a preferred definition; personally, I find the following ones attractive:

- Artificial intelligence is a computerized system that exhibits behavior that is commonly thought of as requiring intelligence. (NSTC)

- The science of making machines do things that would require intelligence if done by men. (Marvin Minsky)

- The study of how to make computers do things at which, at the moment, people are better.1

These days, new AI techniques are quickly adopted and categorized, and consequently they often lose the “AI” label. The first experiments with artificial neural networks, for example, focused on character recognition, which we can do quite well these days but now label optical character recognition (OCR) instead. While the ultimate goal of Artificial General Intelligence (AGI) remains in the distant future, we can consider the AI label to represent the current technological frontier. In this article, without overusing the label, we describe an approach to building general hierarchical autonomous control architectures that combines well-known techniques from satisfiability theory, automated planning, automated diagnosis, constraint programming, reinforcement learning and behavior trees.

Planning—the art of thinking before acting

Since one of its first formalizations in the Stanford Research Institute Problem Solver (STRIPS) in 1971, planning, understood as reasoning about action and change, has been a key field of AI. The simplest (classical) planning problem consists of variables, actions, an initial state and a goal:

- V. Set of variables (winch_busy, motor_running). These variables define the space of all possible instances of the world (states) we can encounter.

- A. Set of actions, where the precondition and the effect of each action are partial assignments to the variables. For example, action start_motor can have precondition: motor_running is ‘false’, and effect: motor_running is ‘true’.

- I. Initial assignment to the variables (winch_busy is ‘false’, motor_running is ‘true’). The initial assignment can typically represent the current observation of the world, from which we would like to achieve a goal.

- G. Expected partial assignment to the variables (logging_done is ‘true’, motor_running is ‘false’).
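The four components above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the formalism of any particular planner: the `Action` class, the `plan` function, and the `stop_motor` and `run_logging` actions are hypothetical names introduced here, and breadth-first forward search stands in for the far more capable search used by real planners.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    precondition: dict  # partial assignment that must hold before the action
    effect: dict        # partial assignment that holds after the action


def satisfies(state, partial):
    """True if every variable in the partial assignment has that value in state."""
    return all(state.get(var) == val for var, val in partial.items())


def plan(initial, goal, actions):
    """Breadth-first forward search; returns a shortest list of action names, or None."""
    start = frozenset(initial.items())
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        frozen, path = queue.popleft()
        state = dict(frozen)
        if satisfies(state, goal):
            return path
        for action in actions:
            if satisfies(state, action.precondition):
                successor = frozenset({**state, **action.effect}.items())
                if successor not in seen:
                    seen.add(successor)
                    queue.append((successor, path + [action.name]))
    return None


# Hypothetical domain built around the variables named in the text.
ACTIONS = [
    Action("start_motor", {"motor_running": False}, {"motor_running": True}),
    Action("stop_motor", {"motor_running": True}, {"motor_running": False}),
    Action("run_logging",
           {"motor_running": True, "winch_busy": False, "logging_done": False},
           {"logging_done": True}),
]
INITIAL = {"winch_busy": False, "motor_running": True, "logging_done": False}
GOAL = {"logging_done": True, "motor_running": False}

print(plan(INITIAL, GOAL, ACTIONS))  # ['run_logging', 'stop_motor']
```

Exhaustive search like this only works for toy problems; the state space grows exponentially with the number of variables, which is why practical planners rely on heuristics.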

While the classical models are simple, they have been extended in various directions, notably:

- partial observability (we do not always know the initial assignment to the variables),

- non-determinism (actions may not always succeed),

- explicit time (actions have durations and may need to overlap),

- and continuous numeric variables (e.g. modeling energy, capacity, …).

Consequently, the modeling becomes expressive enough to subsume a large portion of practical decision-making problems. A typical application of planning consists of the following steps:

1. Build a planning domain capturing the knowledge about the application (V + A), using a language such as the Planning Domain Definition Language (PDDL).

2. Choose a domain-independent planner expressive enough for the created domain, such as POPF.

3. Build a projection from the observed world into the language of the domain, which allows us to generate state I whenever needed.

4. Observe the initial state of the world I, define the goal of planning G, then feed V+A+I+G to the planner, which provides a set of actions (plan P) that achieves the goal G starting from state I.

5. Execute the actions in the plan P.

We can observe that steps 1+2+3 represent the development of planning-based automation, while steps 4+5 represent the deployment of automation in production. Step 3 often contains complex subproblems such as processing high-dimensional data (e.g. classification of images), yet step 1 tends to consume most of the development effort: models are traditionally built by human domain experts, although research in reinforcement learning and automated domain synthesis may simplify model construction in the near future. The dominant paradigm of planning has been domain independence: planners (step 2) are intended to act as general solvers, are developed without knowledge of the domains they will be used for, and are expected to be efficient at solving problems from any domain.
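The deployment side of this workflow (projecting observations into a state, planning, executing) can be sketched as a small loop. Everything here is an assumption made for illustration: the sensor names `motor_rpm` and `winch_load_kg`, the thresholds, and the `planner` and `executors` arguments are hypothetical and stand in for whatever the real system provides.

```python
def project(readings):
    """Projection: map raw readings to planning variables (names and thresholds are made up)."""
    return {
        "motor_running": readings["motor_rpm"] > 0,
        "winch_busy": readings["winch_load_kg"] > 5.0,
    }


def plan_and_execute(read_sensors, planner, executors, goal):
    """Observe the initial state I, ask the planner for a plan P, then execute P in order."""
    initial = project(read_sensors())
    plan = planner(initial, goal)
    if plan is None:
        return None  # no plan found; this is where diagnosis could take over
    for action_name in plan:
        executors[action_name]()  # each executor is a callable that performs one action
    return plan
```

Passing the planner in as a plain callable mirrors the domain-independence idea: the loop does not care which planner produced the plan.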

Diagnosing—understanding failures

Real-world systems naturally include scenarios where an unexpected event leads to a failure that requires either automated or human-assisted recovery. While planning is mainly concerned with finding a set of actions that leads from the current state of the world to the goal state, model-based diagnosis helps us understand and explain why no plan can be found from the current state. A common approach to modeling the consistency of a system state is to define a set of rules R that encode the atomic pieces of knowledge about the system; we say that R is consistent with regard to state S if all the rules in R are satisfied in S. If R is inconsistent with regard to state S, we use conflicts and diagnoses to explain why:

- Minimal conflict C ⊆ R is a set of rules such that C is inconsistent with regard to S and any strict subset of C is consistent with regard to S.

- Minimal diagnosis D ⊆ R is a set of rules such that R \ D is consistent with regard to S and for any strict subset D’ ⊂ D, R \ D’ is inconsistent with regard to S.
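Both definitions can be computed by brute force on small rule sets, as the sketch below shows. It makes assumptions that go beyond the text: the state S is treated as a partial observation, and a rule set counts as consistent if some completion of the unobserved variables satisfies every rule. The rule names and the quarantine-style example are hypothetical.

```python
from itertools import combinations, product


def consistent(rules, observed, variables):
    """True if some completion of the observed partial state satisfies every rule."""
    free = [v for v in variables if v not in observed]
    for values in product([False, True], repeat=len(free)):
        state = {**observed, **dict(zip(free, values))}
        if all(rule(state) for rule in rules.values()):
            return True
    return False


def minimal_conflicts(rules, observed, variables):
    """Smallest inconsistent rule subsets, enumerated by increasing size."""
    found = []
    for size in range(1, len(rules) + 1):
        for names in combinations(sorted(rules), size):
            if any(set(c) <= set(names) for c in found):
                continue  # contains a smaller conflict, so it is not minimal
            if not consistent({n: rules[n] for n in names}, observed, variables):
                found.append(names)
    return found


def minimal_diagnoses(rules, observed, variables):
    """Smallest rule subsets whose removal restores consistency."""
    found = []
    for size in range(0, len(rules) + 1):
        for names in combinations(sorted(rules), size):
            if any(set(d) <= set(names) for d in found):
                continue  # contains a smaller diagnosis, so it is not minimal
            rest = {n: r for n, r in rules.items() if n not in names}
            if consistent(rest, observed, variables):
                found.append(names)
    return found


# Hypothetical rules: each is a predicate over a complete state.
VARIABLES = ["infected", "quarantined", "at_home", "masked"]
RULES = {
    "infected_implies_quarantined": lambda s: not s["infected"] or s["quarantined"],
    "quarantined_implies_at_home": lambda s: not s["quarantined"] or s["at_home"],
    "outside_implies_masked": lambda s: s["at_home"] or s["masked"],
}
OBSERVED = {"infected": True, "at_home": False}

print(minimal_conflicts(RULES, OBSERVED, VARIABLES))
print(minimal_diagnoses(RULES, OBSERVED, VARIABLES))
```

Enumeration is exponential in the number of rules and variables. With hundreds of atomic formulas a real system would more plausibly delegate the consistency check to a SAT or constraint solver.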

As an example, we can think about evaluating whether Covid-19 restrictions are being followed for an observed patient. Figure 2 demonstrates that we find a minimal conflict, showing us the chain of necessary implications that has been broken, and one of the minimal diagnoses shows that if Covid-19 infected patients did not need to be quarantined, the system would become consistent again.

Since industrial automation often happens over a longer period of time of incremental adoption, it is necessary to introduce a strategy for failure management. An example would be dividing failures into categories:

- known failures have been previously identified and we can recover from them automatically,

- diagnosable failures are caught by the model-based diagnosis (hundreds of atomic formulas); human-assisted resolution of the conflicts then either eliminates the failure or turns it into a known failure,

- unexpected failures may require human contribution to understanding the failure.

The effort to develop optimistic automation (aka happy paths) is often surpassed by an order of magnitude by the effort to develop automation for when something goes wrong. Addressing failures of the system can then be seen as an iterative development process of adding new diagnostic rules and recovery strategies, shifting as many failures as possible from the long (generally infinite) tail of unexpected failures to known ones.

Reinforcement learning—world mechanics

Reinforcement learning (RL) is a broad category of learning techniques focused on automatically building an understanding of the world (system, environment) in order to control it and achieve a goal. Figure 3 shows the basic RL loop: given the world and a set of actions, we are tasked with building a policy (a control model) for the world by applying actions to it and receiving observations. Each observation comes with a reward, and the quality of the policy is tied to the total reward the policy receives for interacting with the world.
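The loop of applying an action, observing the result and collecting a reward can be illustrated with tabular Q-learning, one of the simplest RL algorithms. The sketch is not tied to any system described in this article. The five-cell corridor world, the reward of 1.0 at the goal and all parameter values are assumptions chosen to keep the example tiny.

```python
import random

N_STATES = 5                      # corridor cells 0..4; cell 4 is the goal
ACTIONS = {"left": -1, "right": +1}


def step(state, action):
    """Hypothetical world: move along the corridor; reward 1.0 on reaching the goal."""
    nxt = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values from interaction; exploration is uniformly random."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.choice(list(ACTIONS))  # Q-learning is off-policy, so this is allowed
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q


def greedy_policy(q):
    """The learned policy: in each non-goal cell, pick the action with the highest value."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


print(greedy_policy(q_learning()))
```

In this world the learned policy should move right in every cell, since that is the only way to collect the reward. A lookup table does not scale to large state spaces, which is where the deep learning approaches mentioned below come in.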

AI planning can also be seen within the framework of reinforcement learning, e.g. classical planning can have a policy that consists of:

- finding a plan to a goal based on the current observation,

- dispatching actions according to this plan,

- receiving a positive reward for reaching the goal or, in temporal planning, a reward tied to the makespan, the total time spent to reach the goal.

RL would then be concerned with finding a PDDL model that maximizes the quality of the described policy; however, techniques that build PDDL models automatically have not yet scaled beyond very simple worlds. While the described policy, parametrized by PDDL, would always be explainable through the plans themselves (chains of cause-effect explanations), RL has seen significant progress in applying deep learning (which is not easily explainable) to build high-quality policies for non-linear worlds, e.g. learning how to solve a Rubik’s Cube.

Building and maintaining high-quality models of the world has always been one of the most time-consuming challenges of automation, and the last decade has shown that machine learning can contribute significantly and often surpass the quality of human-made models. In practical industrial applications, it is rarely computationally feasible to learn a single end-to-end model; instead, end-to-end models are composed of multiple heterogeneous models, some of which can be machine-learned while others are human-built using AI and operations research modeling techniques. Most of the future advancement in automation and autonomous systems can be expected to come from research on how to build and maintain the models automatically.

Behavior trees—the glue

Behavior trees are an execution control paradigm (comparable to state machines) that was initially used in computer games and has become popular in a wide range of robotic applications. A behavior tree is a decision-making tree that always returns either a success, failure or running status. A node is a behavior tree if it has a finite number of children that are nodes. Figure 3 illustrates an example behavior in which we would like to open a door, but we may need to find a key, pick it up and find the door itself. Blue nodes in the example have the following behavior:

- → (sequence) evaluates to:

  - success if all its children evaluate to success,

  - failure if at least one child evaluates to failure, and

  - running if at least one child evaluates to running while none evaluates to failure.

- ? (fallback) evaluates to:

  - success if at least one child evaluates to success,

  - failure if all children evaluate to failure, and

  - running if at least one child evaluates to running and none evaluates to success.
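The two composite nodes can be sketched as small Python classes. The sketch uses the common left-to-right, short-circuit reading of the rules above: a sequence stops at the first child that does not succeed and a fallback stops at the first child that does not fail, so later children are not ticked at all. The class names, the `make_tree` helper and the simplified door example are hypothetical.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Leaf:
    """Wraps a callable that returns a Status when ticked."""
    def __init__(self, fn):
        self.fn = fn

    def tick(self):
        return self.fn()


class Sequence:
    """The → node: succeeds only if every child succeeds, in order."""
    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status  # failure or running stops the sequence
        return Status.SUCCESS


class Fallback:
    """The ? node: tries children in order until one does not fail."""
    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status  # success or running stops the fallback
        return Status.FAILURE


def make_tree(world):
    """Simplified door example: get a key (if not already held), then open the door."""
    def check(key):
        return Leaf(lambda: Status.SUCCESS if world[key] else Status.FAILURE)

    def set_true(key):
        def act():
            world[key] = True
            return Status.SUCCESS
        return Leaf(act)

    get_key = Fallback(check("has_key"),
                       Sequence(check("key_visible"), set_true("has_key")))
    return Sequence(get_key, set_true("door_open"))


world = {"has_key": False, "key_visible": True, "door_open": False}
print(make_tree(world).tick(), world["door_open"])  # Status.SUCCESS True
```

Because every node exposes the same `tick` interface, a whole subtree such as `get_key` can be dropped into a larger tree without the parent knowing its internals.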

The inherent benefit of behavior trees is that we can plug a behavior tree focused on a certain subproblem into another tree focused on a higher-level problem (without caring about the details of the subproblem), hierarchically decomposing the original problem. We can also see planning and diagnosis within behavior trees:

- Automated diagnosis acts as another node of the tree and acting on the diagnostic results can be encoded into the tree as well, using behaviors such as “if there is a single minimal diagnosis and the diagnosis rules are owned by a single service, restart the service”.

- When a planner produces a plan of actions to take from the current state, these actions form a behavior tree where each action is represented by a single node. The node’s evaluation to success, failure, or running directly reflects the success, failure, or ongoing execution of the action it represents. This is similar to the given example, where finding a key can fail, in which case the whole behavior tree fails.
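The second point, turning a plan into a behavior tree, can be sketched as follows. This is an illustration under assumptions rather than a description of any production system: the `execute` callable, the three status strings and the choice to remember already finished actions between ticks are all hypothetical.

```python
SUCCESS, FAILURE, RUNNING = "success", "failure", "running"


class ActionNode:
    """One node per plan action; its status mirrors the action's execution."""
    def __init__(self, name, execute):
        self.name = name
        self.execute = execute  # hypothetical callable: name -> status string
        self.status = None

    def tick(self):
        if self.status != SUCCESS:  # do not re-run an action that already finished
            self.status = self.execute(self.name)
        return self.status


class PlanSequence:
    """Ticks the actions in plan order and stops at the first one that is not done."""
    def __init__(self, nodes):
        self.nodes = nodes

    def tick(self):
        for node in self.nodes:
            status = node.tick()
            if status != SUCCESS:
                return status  # a failed or running action decides the tree's status
        return SUCCESS


def plan_to_tree(plan, execute):
    """Compile a list of action names into a behavior tree."""
    return PlanSequence([ActionNode(name, execute) for name in plan])
```

With a plan such as `["find_key", "pick_up_key", "open_door"]`, a failure reported for `find_key` makes the whole tree fail on that tick, which is the point at which replanning or diagnosis could be triggered.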

Within the RL framework, we can see that a behavior tree is a (deterministic) policy: it observes the world with each “tick” of the tree and may send an action, such as finding the key, which can itself be a machine-learned subproblem (a policy implemented through a neural network) consisting of moving the camera until it recognizes the key in a picture.

Behavior trees are verifiable, human-readable and easy to visualize, providing a common ground for sharing ideas, architectures and domain knowledge among domain experts, product managers and AI systems.

Conclusions

Automation has generated, and will continue to generate, immense value across all industries and human lives at a constantly accelerating rate. A strong contributor is the broad category of technological advancement that we label today as AI, within which we have peeked under the surface of several building blocks: reinforcement learning, planning, diagnosis, and behavior trees.

At Schlumberger, AI planning has become an integral part of automation in the DrillOps on-target well delivery solution, providing and executing weeks-long plans for drilling operations and helping to drive new collaborations in the industry. In Wireline, AI planning, automated diagnosis, and behavior trees have been the main building blocks for answering the automation challenges of reasoning and control.

Author information: Filip Dvorak is a senior AI research scientist in Houston, responsible for automated decision making, with a particular focus on AI planning, learning, execution, diagnosis and operations research. He received his Ph.D. in AI from Charles University in Prague and previously worked on autonomous AI applications in manufacturing and transportation.

Sources

1 Abraham Rees (1819), "Clepsydra", in Cyclopædia: or, a New Universal Dictionary of Arts and Sciences. The image is the JPEG reproduction published 2007-02-01 by the Horological Foundation.