Data Science for All!

The energy industry has faced a dynamic business environment with significant economic pressures over the last few years, to say the least. It has been clear to many that new digital technology would help our industry create more efficient and effective ways of working, while fostering a culture of innovation.

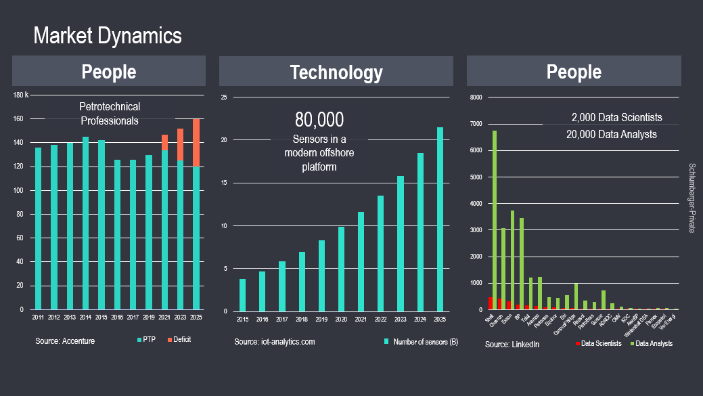

On the macro-indicator side, two trends stand out in our industry: the evolution of the workforce and the volume of data generated over time. Past and predicted figures move in opposite directions. The number of petrotechnical professionals has generally been shrinking for the past half-decade, and pre-Covid-19 studies were forecasting further reductions for the years to come. The current situation is likely to exacerbate that trend. In contrast, the amount of data generated in our industry is forecast to increase at a near-exponential rate, driven by the instrumentation of equipment in producing fields and manufacturing facilities.

Now, if you run an informal experiment and search LinkedIn for how many data scientists or data analysts a company in the E&P industry employs, you may get an idea of how organizations have decided to tackle this problem. They seem to have turned towards reskilling or upskilling their workforce in order to transform petrotechnical experts into petro-digital ones. The same trend can be seen in the sponsorship some domain experts receive to pursue data science degrees, which gives them ways to use new digital technologies and increases their capacity to assimilate the ever-increasing amount of information in a fraction of the time. This, to me, is a fundamental component of the digital transformation we see happening in oil and gas (as it is happening in other industries).

Such achievements rely on:

- the structural changes a company needs to go through, together with support for managing them, and
- the development and deployment of a digital infrastructure that will enable innovative change.

This, realistically, is a journey that cannot be undertaken alone. It requires very specific expertise across a wide range of the digital spectrum. It also requires deeper collaboration than ever experienced before, and a deeper level of trust!

Similarly, a recent survey from MIT-Sloan and Deloitte (Accelerating Digital Innovation Inside and Out) tries to isolate the characteristics that define digitally mature organizations. One standout characteristic is the development of a digital ecosystem: a permeable environment that stimulates innovative thinking by osmosis with relevant third parties. This conclusion was already held to be true in Schlumberger almost a decade ago and led to the construction of new digital technology centers: the STIC (Software Technology Innovation Center) in Palo Alto, California, and the SLIIC (Schlumberger Limited Industrial Internet Center) in Houston, Texas, tasked with working with leading enterprises in their field and building up expertise.

As a result, the organization further crystallized around strong internal digital platforms focused on developing a new ecosystem of solutions. Its external, commercial incarnation is the DELFI cognitive E&P environment.

“Artificial intelligence is as important for our future as the surge in oil exploration. It will change the order of magnitude in the size of our business”, Jean Riboud, former CEO of Schlumberger, speaking at the New York Society of Security Analysts in 1980.

Artificial intelligence (AI) encapsulates concepts that have been available for many decades. Although a few people, such as Jean Riboud in 1980, sensed its potential impact on our industry almost half a century ago, only recent advances in high-performance computing (HPC) have made these solutions accessible and available to all.

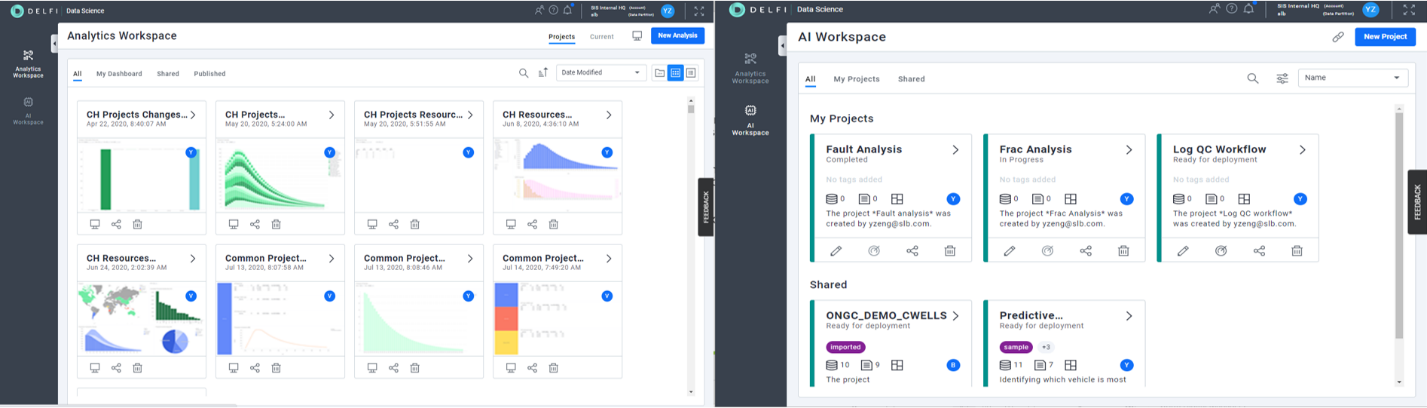

However, for AI to thrive, it must be effectively usable by our petrotechnical professionals, so they can realize its value and let their creative minds do the rest. It is not practical to develop Python or R expertise across the entire petrotechnical expert (PTE) community. The situation is analogous to the time when geologists were given statistical tools (e.g. variograms, Gaussian functions, normal transformations) to better model the subsurface. Ease of use and accessibility thus became of paramount importance when the data science capabilities were put into the DELFI environment.

Typically, the use of machine learning (ML) is limited to data scientists because of the complexity of building and managing models. But organizations quickly realized that tight collaboration between domain experts and data scientists often leads to faster and more pertinent results. The need for a common platform where both can collaborate and speak the same language became obvious. A visual modeling tool fits well here: its visual workflows provide a common understanding for data scientists and domain experts alike.

Further, the need for a ‘no code’ and ‘low code’ environment was apparent, to allow engineers to create end-to-end machine learning projects for simple cases (such as supervised facies classification). Thus, starting with a simple visual modeling tool in a no-code to low-code environment provides a first step in graduating from petrotechnical to petro-digital expertise. Once the PTEs master these digital tools, the transition to more sophisticated ones such as deep learning or natural language processing (NLP) will become natural. To achieve this, it is essential to avoid creating new silos and to break down the existing ones, and this has been the mission behind integrating technologies from Google, Microsoft, Dataiku, and TIBCO into the DELFI environment.
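For readers curious about what a "simple case" like supervised facies classification involves behind a visual workflow, here is a minimal sketch in plain Python. It is not how the DELFI environment implements it. It assumes a basic nearest-centroid rule, and the log values, facies labels, and function names (`fit`, `predict`) are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from math import dist

# Hypothetical labeled samples: (gamma ray [API], bulk density [g/cm3]) -> facies.
# The numbers are invented for illustration, not taken from any real well.
TRAINING = [
    ((35.0, 2.30), "sand"),
    ((40.0, 2.35), "sand"),
    ((45.0, 2.32), "sand"),
    ((110.0, 2.55), "shale"),
    ((120.0, 2.60), "shale"),
    ((105.0, 2.58), "shale"),
]


def fit(training):
    """Learn a min-max scaler and one centroid per facies from labeled samples."""
    features = [x for x, _ in training]
    lows = [min(col) for col in zip(*features)]
    highs = [max(col) for col in zip(*features)]
    # Guard against a zero range so the scaling never divides by zero.
    spans = [(hi - lo) or 1.0 for lo, hi in zip(lows, highs)]

    def scale(x):
        # Bring every log onto a comparable 0..1 range.
        return tuple((v - lo) / s for v, lo, s in zip(x, lows, spans))

    grouped = defaultdict(list)
    for x, label in training:
        grouped[label].append(scale(x))

    # The centroid of a facies is the mean of its scaled samples.
    centroids = {
        label: tuple(sum(col) / len(col) for col in zip(*rows))
        for label, rows in grouped.items()
    }
    return scale, centroids


def predict(model, x):
    """Assign the facies whose centroid is closest to the scaled sample."""
    scale, centroids = model
    scaled = scale(x)
    return min(centroids, key=lambda label: dist(scaled, centroids[label]))


model = fit(TRAINING)
print(predict(model, (42.0, 2.33)))
print(predict(model, (115.0, 2.57)))
```

In a no-code tool, each of these steps (scaling, training, prediction) would typically appear as a block in a visual workflow rather than as code, which is what allows a domain expert and a data scientist to reason about the same pipeline.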

Digitalization and AI impact businesses across the spectrum of commercial activity. Within the oil and gas industry we have found the following to be particularly relevant:

- Our industry has long and complex planning cycles. AI allows for a significant reduction in this cycle time by intelligently automating various manual tasks and providing advisory systems for complex workflows.
- In the production and drilling domains, data is often captured in real time and requires an immediate response. We need to monitor status, optimize operations, detect anomalies, respond, and plan for interventions, often in near real time.
- The industry often has to operate in harsh conditions and depends critically on expensive, complex equipment whose downtime must be limited and predictable. Downtime is very costly and can be dangerous. ML is helping to optimize monitoring and maintenance schedules to maximize uptime.
- A shortfall in expertise and resources is predicted, coupled with cost pressures. For example, many oil companies are cutting their budgets in response to the Covid-19 pandemic. Applying digital technology and ML/data science can help them quickly identify possible options and ranked scenarios.
- When a crisis happens, typically a major unexpected event such as a hurricane or an accident, it is important to access and analyze a comprehensive range of data quickly, with the aim of looking at the situation from many perspectives to find options that lead to the most appropriate decision.

These goals are not set for a distant future; the technology is already maturing and delivering concrete results:

- In seismic processing, cycle times have been reduced from 13 months to 2.5 months.
- In well intervention planning, workflows have been reduced by 80%.
- Structural interpretation has been accelerated by at least a factor of five.
- In field development planning, one can evaluate 14 times the number of scenarios, three times faster.
- Reservoir engineers are able to complete their work, not in 120 days, but in three days.
- Well construction time has been cut in half.

But this is not only about providing generic, off-the-shelf solutions. It is also about empowering people across the industry by lowering the entry point to data science. Geoscientists and engineers should be able to manipulate data, slice and dice it comfortably, and have solutions to easily visualize, analyze, and monitor it. Finally, they should have the capacity to infer new data, build relationships, and find ways of their own to further automate the tasks they find redundant.

The DELFI Data Science profile will help domain experts put their own creative thinking into practice. This can be accelerated by framing a fertile and focused environment around it in the form of a Digital Innovation Lab, bridging expertise between the user and Schlumberger and supported by all the technology available, to unlock new orders of magnitude in efficiency and performance.

Sampath manages the DELFI Data Science product development in Schlumberger. Understanding and driving business requirements is one of his primary functions, along with supporting on-the-ground teams in various client projects, demos, and proposals. A data science enthusiast with a combined development and domain background, he also works on various prototypes for integrating AI technologies with domain workflows.

Sampath joined Schlumberger India in 2012 after completing a master's degree in geology from the Indian Institute of Technology. During the past eight years, he has worked in various business lines, covering geoscience, development, data management, and the DELFI business, across various locations.

Jimmy is responsible for fostering, promoting, and funneling digital innovation projects in Schlumberger. His role is to ensure that emerging technologies, combining cloud-based solutions, artificial intelligence, edge compute, and various other interesting pieces of tech, are evaluated and integrated into the wider exploration-to-production workflow with maximum return on investment. He joined the oil and gas industry in 2008 after graduating from IFP and the University of Strasbourg with degrees in petroleum geosciences and geophysics, respectively. He then held various positions in the Schlumberger software product line, from product ownership for the development of various parts of the subsurface characterization workflow to service delivery management for the Scandinavian business unit.