Production Operations – the imperatives to evolve, and an extraordinary new world of opportunity

Schlumberger has over two decades of experience developing and implementing digital oilfield technologies and processes. Our singular goal has always been to create value by overcoming the complex challenges our clients face.

During that time, Schlumberger has matured and evolved technologies by strengthening existing domain-based data and simulation-modeling capabilities and incorporating the latest value-adding advancements. The pace of change in the industry has accelerated in recent years, with a new breed of data-centric digital technologies that enable faster response to increasingly complex and dynamic challenges.

There are many facets to these challenges. Operating producing assets is inherently complex, and oilfields are dynamic, ever-changing, and sometimes unpredictable. Operating conditions, fluid properties, and flow regimes change continuously. Each day brings new events or issues, some identified and diagnosed, others not. Equipment degrades a little more, and the risk of failure increases. Many of these variables are interrelated and combine to adversely affect production performance unless action is taken. To secure safe, efficient, and effective production operations, decisions must be made continuously to drive actions and results. The timing and quality of these decisions are everything.

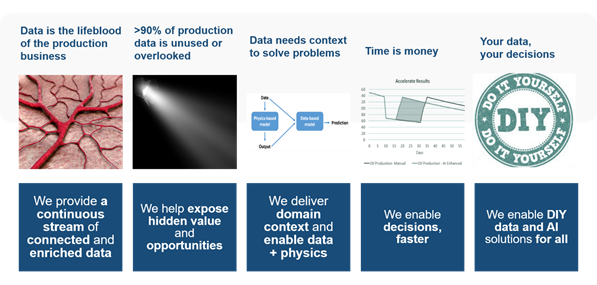

Production data–critical, challenging, underused, and bursting with potential

Data drives oilfield decisions. Many traditional digital oilfield solutions used to manage data were developed in the 1990s. During that period, I cut my teeth in data management in the industry–loading data and doing my very best not to break the customer’s subsurface and production applications and databases I was tasked with supporting. Those early years taught me four fundamental things:

- Data by itself is rather useless. It needs context and domain know-how to understand it and extract value from it.

- Those databases were built to contain data. It was hard (and sometimes painstaking) work to access data and move it to another database or system.

- Data brought together from various sources, often a mix of proprietary and vendor databases and files, was difficult to reconcile because of inconsistent data frequencies, units, and custom properties, and sometimes multiple versions of the same data. As a result, the risk of losing meaning and compromising data quality was high.

- There was no shortage of data, even before the advent of instrumented oilfields. Most of it was either used for one purpose only or never used at all.
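The reconciliation problem in the third point can be made concrete with a small sketch. It assumes two hypothetical sources for the same well: an hourly historian feed reporting oil rate in cubic metres per day, and a daily report in barrels per day. The function names, the 5% disagreement tolerance, and the use of a simple daily mean are all illustrative assumptions, not a description of how any Schlumberger product works.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Conversion factor: 1 cubic metre is approximately 6.289811 oil barrels.
M3_TO_BBL = 6.289811


def to_bbl_per_day(value, unit):
    """Normalize a rate to barrels per day; this sketch handles only two units."""
    if unit == "bbl/d":
        return value
    if unit == "m3/d":
        return value * M3_TO_BBL
    raise ValueError(f"unsupported unit: {unit}")


def daily_average(readings):
    """Collapse (timestamp, value, unit) readings to one mean rate per calendar day."""
    by_day = defaultdict(list)
    for ts, value, unit in readings:
        by_day[ts.date()].append(to_bbl_per_day(value, unit))
    return {day: mean(vals) for day, vals in by_day.items()}


def reconcile(source_a, source_b, tolerance=0.05):
    """Align two sources on a common daily grid and flag days where they disagree."""
    a, b = daily_average(source_a), daily_average(source_b)
    merged = {}
    for day in sorted(a.keys() | b.keys()):
        va, vb = a.get(day), b.get(day)
        both = va is not None and vb is not None
        # A conflict is only possible when both sources report that day.
        conflict = both and abs(va - vb) > tolerance * max(va, vb)
        merged[day] = {"a": va, "b": vb, "conflict": conflict}
    return merged


# Hypothetical data: hourly historian readings in m3/d and a daily report in bbl/d.
historian = [(datetime(2021, 3, 1, hour), 159.0, "m3/d") for hour in range(24)]
daily_report = [(datetime(2021, 3, 1), 1000.0, "bbl/d")]

for day, row in reconcile(historian, daily_report).items():
    print(day, round(row["a"], 1), row["b"], row["conflict"])
    # prints "2021-03-01 1000.1 1000.0 False"
```

Even this toy version has to make explicit decisions (which unit to normalize to, how to aggregate to a common frequency, what counts as a conflict) that are often left implicit when data is moved by hand, which is where meaning and quality tend to be lost.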

The world of on-premises data integration and management has not evolved significantly since the 1990s. The fundamental principles still hold, and the same problems remain. Engineers and petrotechnical experts (PTEs) struggle to analyze data, and those making business decisions are affected most of all.

In the past couple of years, I have been lucky enough to be involved in the revealing process of conducting customer interviews. These interviews almost universally point to a pervasive lack of trust in data and a lack of confidence in operational decisions, decisions that may have a multimillion-dollar impact. The root cause of this uncertainty lies in the way data is managed. Bringing data together, accessing it, and sharing it isn't easy. The whole process is also unduly time-consuming, with as much as 80% of an engineer’s day spent gathering and manipulating data. It’s like engineers working in a heavy fog. Without a lot of preparation, it’s rare to be sure that the data is the correct version, that it’s up to date, and that it represents the latest operating conditions.

The fog also permeates asset management decisions: asset managers struggle to see the big picture clearly and rely on input from team members who may not themselves be fully and confidently informed. Adding to the burden and complexity of managing data, engineering analysis processes can be difficult to repeat, and engineering bias prevails.

If you split your best engineers into two rooms to solve the same problem, they will come up with different answers. The results are shaped by each engineer’s prior experience and, in some cases, by gut feel rather than analysis. There also simply isn’t the time or the means to extract maximum value, or sufficient insight, from the data.

In addition to local challenges, many macro-level challenges have always affected the production business. The coronavirus pandemic has heightened these challenges, underlining the need for operating companies to be flexible and agile to survive, let alone thrive, during this era of profound change, which includes the pressure to minimize carbon footprint and the trajectory we’re on toward automated oilfields.

These challenges have two fundamental things in common: first, data drives the decision-making processes related to them; second, they are enterprise-scale and need enterprise-scale solutions.

To thrive as an industry, we must improve returns through capital efficiencies, grow earnings with cost efficiency, and meet the dual challenge of producing more energy while emitting less carbon. Digital technologies, including AI and automation, hold great promise, and value is being realized from them today. The challenge is attaining that value at scale, beyond isolated use cases, to deliver impact and efficiency across customer organizations and the industry.

The new way

We’ve learned from our experience in digital oilfield data management, and we’ve learned from our customers that software is not one-size-fits-all. To solve problems consistently and effectively, software technology must be molded to the workflow or need, not the other way around.

We’ve built upon our rich heritage of digital oilfield domain and simulation experience to develop digital solutions flexible enough to fit people, workflows, and processes. We leverage best-in-breed digital technologies to release significantly more value from operational data to make better decisions, faster.

We now have the means to act at lightning speed. It’s all about how effectively and quickly an algorithm or model can detect, analyze, and optimize, which means near-instantaneously, in real time. This is made possible by the speed and power of workflow automation, the edge compute and AI optimization capabilities of Agora* edge AI and IoT solutions, and the optimized automation and control capabilities of Sensia.

There’s an increasing focus on enterprise-level solutions for operating companies. In part, this comes from the simple fact that the enterprise is where at-scale solutions are delivered. It also stems from the fact that enterprise-level solutions are the only way to build a truly holistic view of the business, develop cross-domain workflows, and break down the silos in which decisions are taken in isolation, without regard to the impact elsewhere. After all, the reservoir-production system is a single connected system, so we must remove the barriers around data to ensure that decisions and actions benefit the system as a whole.

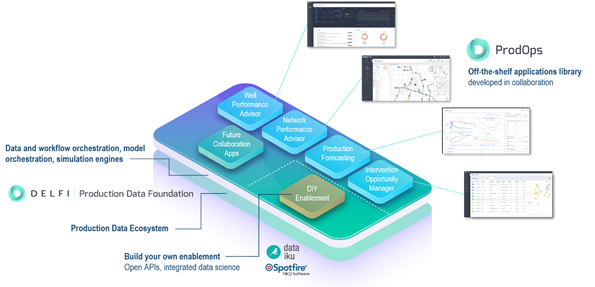

Our approach to transforming enterprise production operations is embodied in DELFI Production Data Foundation and the ProdOps tuned production operations solution. This new breed of cloud-native solutions uses advanced digital technology and embraces the lessons of the past 20 years. Both solutions are already field-proven, and we continue to expand them in conjunction with clients around the world. They are fast, flexible, highly scalable, and transformative.

Production Data Foundation provides enterprise data integration and access from cloud and on-premises sources, delivering a live stream of contextualized data that can be used to generate insights through integrated data analytics and AI. It underpins apps that generate automated advisory guidance and provide the means to perform deep analysis, equipping engineers and asset managers to make higher-quality, better-timed decisions that enhance production performance.

Both solutions enable our customers, and third parties, to design, build, operationalize, and maintain their own intellectual property, from incorporating a single existing workflow to developing entirely new, full-blown AI-fueled apps. These solutions can scale to extract maximum value for customers and are built on an extensible, future-proof foundation that accelerates their digital transformation journey.

The impact on performance of the ProdOps solution and Production Data Foundation is profound, with early-adopter customers already reporting extraordinary gains, including time and cost savings of nearly 90%. Even more impressive is the 1.5% overall production increase that one customer reported and directly attributed to these solutions. That may sound modest, but at today’s prices, it amounts to additional sales revenue on the order of USD 150 million for the customer. And that’s just in year one. I did say extraordinary, didn’t I?
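As a rough sense of scale, the two figures in the previous paragraph imply a baseline. Assuming revenue scales linearly with production at constant prices (an assumption for illustration, not a figure reported by the customer), a 1.5% increase worth about USD 150 million corresponds to annual sales revenue of roughly USD 10 billion before the increase:

```latex
\Delta R = 0.015\, R_0 \approx \text{USD } 150 \text{ million}
\quad\Longrightarrow\quad
R_0 \approx \frac{\text{USD } 150 \text{ million}}{0.015} = \text{USD } 10 \text{ billion}
```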

Author information: Colin has 25 years of experience in oil and gas, with a background in data management, geoscience, project management, petroleum engineering, and upstream technology, working with customers on all continents. Colin holds a Bachelor’s degree in Land Economics and a Master's degree in Petroleum Engineering and Project Management, and is currently based in Houston, Texas.

LinkedIn profile: https://www.linkedin.com/in/smithcjb

Disclaimer: All opinions expressed by the blog contributors are solely their current opinions and do not reflect the opinions of Schlumberger or its affiliates. The blog's opinions are based upon information they consider reliable, but neither Schlumberger nor its affiliates warrant its completeness or accuracy, and it should not be relied upon as such.